Production LLM Deployment: vLLM, FastAPI, Modal and AI Chatbot

Production-Grade LLM Deployment and High-Load Inference with vLLM, Chatbots with Memory, Local Cache of Model Weights

4.58 (6 reviews)

111 students

5.5 hours of content

Last update: Mar 2025

Regular price: $19.99

What you will learn

Master volume mapping to efficiently manage model storage, cut redundant data retrieval, optimize weight storage, and speed up access by using local storage str

Master deploying AI models with vLLM, handle thousands of requests, and design modular architectures for efficient model downloading and inference

Create a conversational AI chatbot using Python, integrating OpenAI's API for seamless, real-time chats with deployed language models

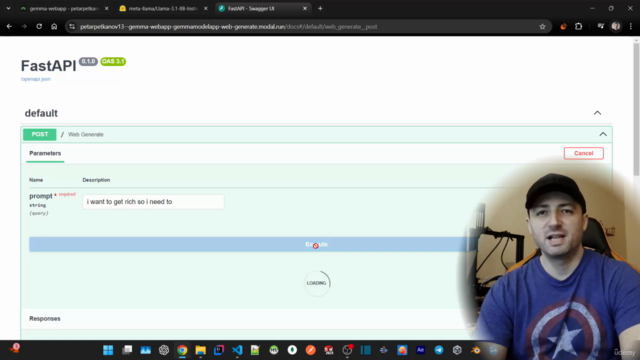

Use FastAPI and vLLM to build efficient, OpenAI-compatible APIs. Deploy REST API endpoints in containers for seamless AI model interactions with external apps

Use concurrency and synchronization for model management, ensuring high availability. Optimize GPU use to efficiently handle many parallel inference requests

Design systems that scale efficiently using locally stored model weights. Secure apps using advanced authentication and token-based access control

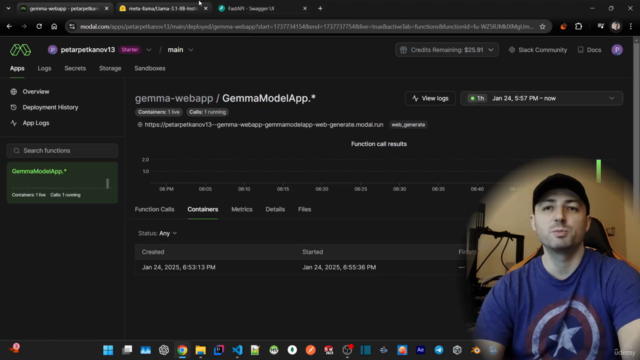

Execute GPU- or CPU-intensive functions of your locally running application on Modal's powerful remote infrastructure

Deploy AI models with a single command to run on remote infrastructure defined in your application code

Implement web APIs: Transform Python functions into web services using FastAPI in Modal, integrating effectively with multi-language applications

Udemy ID: 6421265

Course created date: 24/01/2025

Course indexed date: 22/06/2025

Course submitted by: Bot